This is a bit of a deviation from my usual post. You can thank Brent Ozar for that. He decided to host Adam Machanic’s T-SQL Tuesday this month. Since Brent Ozar Unlimited’s newsletter is about the only one I read on a daily basis (and that even includes any racing-related ones I get!), I noticed it and thought I’d participate.

Brent’s topic is Microsoft Connect items. The one I chose is… well, let’s call it a pi-in-the-sky feature request. Ok, no more puns, I promise!

Ah, yes, SQL Server on Raspberry Pi… Once the Linux news came out, I think it’s safe to say it was only a matter of time before we saw a Connect suggestion like this. What I’d like to do is go through a little thought exercise on why it would indeed be a good idea, why Microsoft didn’t bake it in already, why it doesn’t work, and what needs to be done to get it there.

By the way, the Connect item also references R. I’m going to pretend for now that I didn’t see that. Maybe somebody like Ginger Grant will take a crack at it.

Once again, this isn’t my usual blog post. Typically, if I pose a question, I’ll give the answer. In this case, though, I don’t have all the answers. My hope here is simply to get a conversation going. So…

Is It A Good Idea?

This is basically a feature request. There are a lot of factors that go into the decision to add a feature to a product. One that is vital, but sometimes overlooked, is whether or not it fits within the overall theme of the product. It could be argued that small devices, in general, are not really a market for SQL Server. But I think Microsoft settled that argument when they announced Linux support. This is less about compact devices and more about running on one of the most popular Linux platforms.

Another factor is adoption. No one wants to add a feature that doesn’t get used. What would SQL Server on Pi even be used for? Truthfully, I really don’t know. But that’s the beauty of Raspberry Pi. The foundation initially aimed it at education and developing countries, but it has found uses far beyond those original target markets. There are a lot of hobbyists out there experimenting with Raspberry Pi. I’ll bet quite a few of them interact with SQL Server in their day job. So, rather than ask why Microsoft should add this feature, should we ask why not? I think this is a classic case of putting it out there and just waiting to see what happens.

Why Doesn’t Microsoft Support It Already?

I know only one answer to this question, and it drives the rest of this post. But first, let’s be clear – Microsoft put SQL Server on Linux to compete with Oracle. I don’t think anyone’s questioning that rationale. So, something like Raspberry Pi, while it may be a “nice to have,” is not on the v1 list of requirements.

But Raspberry Pi is just a platform for Linux, and SQL Server runs on Linux, so what’s the problem? Well, there may be others, but there is one absolute show-stopper. SQL Server’s minimum memory requirement on Linux is 3.25 GB. The Raspberry Pi 3 Model B comes with 1 GB. Which leads us to…

Why Doesn’t It Work?

Here we have an impasse. You can’t add memory to a Pi. Well, you could, but it’s going to involve a soldering iron, hardware schematics, probably some assembly language skills… effectively, though, you can’t. You get what you get, and right now you get 1 GB at most.

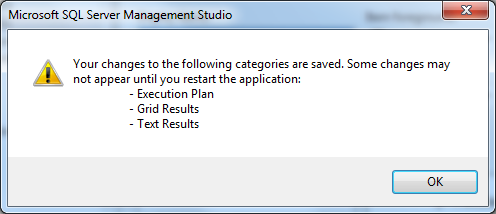

As for SQL Server, it’s not an empty requirement. It won’t install on less than 3.25 GB. And once you install it, if you then lower the amount of memory, it won’t start. Ironically, I used an Oracle product, VirtualBox, to test this.

The reasons for this requirement are a bit cloudy to me. If you research memory use in SQL Server, you find two types of results. First, you have the question of just how much memory SQL Server can use effectively, on the high end. That’s not exactly our problem.

Second, though, you have the issue of how SQL Server uses memory. It has its own memory manager. When you configure it, you give it a constraint (how much memory to use) and it does its own thing inside this constraint. Incidentally, it’s surprising how many Google search results are along the lines of “Why is SQL Server using all of my memory?” Well, because you allowed it to!

So, approaching SQL Server’s memory usage from the angle of “How can I get it to use as little as possible?” is a bit outside the norm. On that barebones Ubuntu VM of mine, I can see an idle SQL Server only consuming about 700 MB. Yes, that’s while idle, and SQL Server will need room to do its job, but that’s a far cry from 3.25 GB. Incidentally, it’s also consuming about 2.7 GB of virtual memory.
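If you want to pull those two numbers yourself, Linux exposes them per process in /proc/<pid>/status as VmRSS (resident memory) and VmSize (virtual memory). Here’s a minimal Python sketch of how that might look. The function name and the sample text are mine, made up for illustration (the figures simply mirror the rough numbers above), and it assumes you’ve already found the process ID of the SQL Server process.

```python
def parse_status_kb(status_text, fields=("VmRSS", "VmSize")):
    """Pull selected kB-valued fields out of the text of /proc/<pid>/status."""
    found = {}
    for line in status_text.splitlines():
        # Each line looks like "VmRSS:\t  716800 kB"
        name, _, rest = line.partition(":")
        if name in fields:
            found[name] = int(rest.split()[0])
    return found


def kb_to_mb(kb):
    return kb / 1024


# Hypothetical sample, shaped like real /proc/<pid>/status output.
# The values are invented to match "about 700 MB" and "about 2.7 GB".
SAMPLE_STATUS = (
    "Name:\texample\n"
    "VmSize:\t 2831155 kB\n"
    "VmRSS:\t  716800 kB\n"
    "Threads:\t100\n"
)

usage = parse_status_kb(SAMPLE_STATUS)
resident_mb = kb_to_mb(usage["VmRSS"])
virtual_mb = kb_to_mb(usage["VmSize"])
```

On a real box you would pass in the contents of /proc/<pid>/status instead of the sample string. Keep in mind that resident memory at idle says nothing about what the engine will want once it has work to do.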

You can see, to a degree, what SQL Server is doing with that memory. The most helpful DMV is probably sys.dm_os_memory_clerks. As the name implies, it shows how much memory is under the control of each of the active mid-level memory allocators. If you add up the pages_kb values, though, you don’t get the full memory footprint. Of course, the executing code needs space as well, and here’s where things get blurry. SQL Server stores its data in 8 KB pages, but it also uses those same pages to hold some system objects. So the question of what is used by the engine, by features, by cached data… it begs the usual PASS community response… it depends.
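To make the “add up pages_kb” idea concrete, here’s a rough sketch, again in Python. The query uses the real type and pages_kb columns of sys.dm_os_memory_clerks, but everything else is an assumption on my part: the function name is invented, and the sample rows are hypothetical numbers, not measurements from my VM. How you actually run the query (sqlcmd, a driver, whatever) is left out on purpose.

```python
# T-SQL you could run against the instance; it is only text here.
CLERK_QUERY = """
SELECT type, SUM(pages_kb) AS total_pages_kb
FROM sys.dm_os_memory_clerks
GROUP BY type
ORDER BY total_pages_kb DESC;
"""


def summarize_clerks(rows, top=3):
    """rows: iterable of (clerk_type, pages_kb) pairs.

    Returns (total_mb, ranked) where ranked holds the `top` largest
    clerk types as (clerk_type, pages_kb), biggest first.
    """
    totals = {}
    for clerk_type, pages_kb in rows:
        totals[clerk_type] = totals.get(clerk_type, 0) + pages_kb
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    total_mb = sum(totals.values()) / 1024
    return total_mb, ranked[:top]


# Hypothetical rows, for illustration only.
sample_rows = [
    ("MEMORYCLERK_SQLBUFFERPOOL", 204800),
    ("MEMORYCLERK_SQLGENERAL", 51200),
    ("CACHESTORE_SQLCP", 25600),
    ("MEMORYCLERK_SQLBUFFERPOOL", 25600),
]

total_mb, biggest = summarize_clerks(sample_rows)
```

Comparing a total like this against the resident memory of the process gives a feel for how much of the footprint the clerks don’t account for.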

As a side note, what’s the least helpful DMV? That would be sys.dm_os_sys_memory. It doesn’t even work on Linux yet. That’s another Connect item.

What Needs To Be Done?

This is the question I really can’t answer. Could Microsoft give us the option to disable some features that don’t make sense on a compact system? How about full-text indexing? Would it really be necessary in this case? Ironically, even though you could disable it at one time, you no longer can. This leads me to believe there is the possibility that Microsoft has somehow made use of it elsewhere in the engine, which would kill it as a candidate. I wonder if that’s the case.

Another good candidate might be CLR. Once again, is this really necessary in a small system? And due to the inherent security vulnerabilities, this is a pretty isolated feature.

But wait! Before we go too far down that path, Microsoft has already figured it out! Check out the requirements for SQL Server 2016. Express Edition requires only 512 MB!

I’m at a loss at this point. Does Microsoft have any plans to trim the Linux memory requirement? What did they do to allow Express to run so light? Can that strategy be applied? And, should they remove this barrier, what else is holding this up?

The most important question to me, though, is this: If you had a Raspberry Pi with SQL Server on it, what would you do with it? Comments are welcome!

I mentioned this was timely, though, didn’t I? Well, that’s because I’ll be giving my second ever PASS virtual session on Thursday, the 20th. I’m a part of the 24 Hours of PASS: Summit Preview 2017! The same session I gave to YooperPASS, A Guided Tour of the SqlClient Namespace, will also be part of this webinar event. Because I’ll be presenting this session to a much wider audience now, I’d like to expand on my approach to this session.
