They say the only three numbers in computer science are 0, 1, and N. Well, I’ll be at the SQL Saturday in Columbus, Ohio, on July 11th, giving a session entitled Playing in Traffic. It’s really a talk about keeping the wheels turning as a SQL developer when N gets really big. It’s the last session of the day, so expect things to be a bit laid back and mildly entertaining, but hopefully still very educational. See you in Columbus!

#OutrunCancer

A lot can happen in a couple of hours.

Right now, I am taking part in the OneAmerica 500 Festival Mini-Marathon. My start time is 8:15, and if I stay on pace I should cross the finish line around 10:30. But that’s not why I’m writing this.

I’m asking something of you. While I’m out there running this morning, I have a fundraiser going. It’s for the Children’s Cancer Research Foundation. Please donate here.

Maybe you know kids with cancer, like Ariana and William, and you know how important this research is. Maybe you’re a runner yourself and want to support a guy who’s clearing 13 miles for the first time today. Maybe you’re a fellow 500 fan and want to do some good as part of the Month of May. Whatever the reason, don’t think about it, don’t weigh options, just give.

Thank you for helping me #OutrunCancer.

Godspeed.

I <3 SET STATISTICS IO ON

Once upon a time, a senior DBA at the Salesforce Marketing Cloud had pointed out a very small performance tweak to me. I was curious about whether or not it still held true in SQL Server 2014, so I wrote up the sample below. What’s interesting to me is that a cursory examination of the Execution Plan doesn’t reveal the difference. Of course, if you dig deep enough, you’ll find it there. But it’s not obvious.

On the other hand, by using SET STATISTICS IO ON, I can easily see the difference. Hopefully, this isn’t some new earth-shattering development for you so much as a simple reminder to make use of this statistical information.

IF NOT EXISTS (SELECT 1 FROM sys.indexes WHERE object_id = OBJECT_ID(N'Person.Person') AND name = N'IX_Person_PersonType_EmailPromotion')

CREATE NONCLUSTERED INDEX IX_Person_PersonType_EmailPromotion ON Person.Person (PersonType, EmailPromotion);

SET STATISTICS IO ON; SET NOCOUNT ON;

SELECT BusinessEntityID, EmailPromotion

FROM Person.Person

WHERE PersonType = N'SC'

AND EmailPromotion IN (1, 2);

SELECT BusinessEntityID, EmailPromotion

FROM Person.Person

WHERE PersonType = N'SC'

AND EmailPromotion BETWEEN 1 AND 2;

SET STATISTICS IO OFF;

DROP INDEX IX_Person_PersonType_EmailPromotion ON Person.Person;

Table 'Person'. Scan count 2, logical reads 5, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Table 'Person'. Scan count 1, logical reads 3, physical reads 0, read-ahead reads 0, lob logical reads 0, lob physical reads 0, lob read-ahead reads 0.

Wondering what exactly these results mean? Look here: https://msdn.microsoft.com/en-us/library/ms184361.aspx

Wondering what the tweak was? Look closely at the IN versus the BETWEEN, and then at the number of scans and reads.

Here We Go Again

I’ll be presenting my session from SQL Saturday #358 at this month’s IndyPASS meeting next Tuesday, 3/17. I’m looking forward to all the heckling I’ll be getting from the Salesforce Marketing Cloud‘s performance DBA’s that will be in attendance.

Meeting attendees will also get an added bonus. I have a voucher for a free SQL Admin Toolset from Idera that will be part of the prize drawing. This was courtesy of Idera’s support of #358 in Albuquerque.

So, free pizza, the chance to heckle me, and the chance to win free software… what else could you ask for? See you Tuesday!

Subscriber Lists v Data Extensions

For those of you in the Indianapolis area who work with the Salesforce Marketing Cloud, you may be interested in Tuesday night’s Marketing Cloud Developers Group meeting. I will be presenting a session entitled “Subscriber Lists versus Data Extensions: Choosing the Best Option Every Time”.

[n]varchar truncation

I was very happy with the response to my SQL Saturday session in Albuquerque. To those who attended and provided me with feedback, thank you.

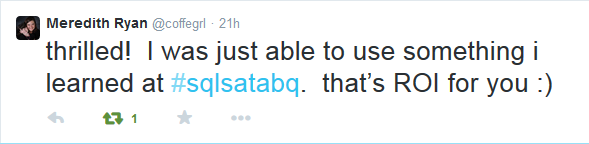

One of the items I covered led to this tweet from @coffegrl:

Which just made my day yesterday. Anyway, here’s what she was talking about. Have a look at this script:

DECLARE @stmt NVARCHAR(MAX);

SELECT @stmt = N'foo' + REPLICATE(N'A', 4000) + N'bar';

SELECT LEN(@stmt), LEFT(@stmt, 5), RIGHT(@stmt, 5);

What happens when you run it? Ideally, the results should be 4006, ‘fooAA’ and ‘AAbar’. But that’s not the case. Even though @stmt is NVARCHAR(MAX), the four components of the string concatenation (‘foo’, ‘A’, the results of REPLICATE, and ‘bar’) are not. As a result, the value that is constructed is, in this case, an NVARCHAR(4000), which is then written to @stmt.

Here’s one way to fix it:

DECLARE @stmt NVARCHAR(MAX);

SELECT @stmt = N'foo' + REPLICATE(CAST(N'A' AS NVARCHAR(MAX)), 4000) + N'bar';

SELECT LEN(@stmt), LEFT(@stmt, 5), RIGHT(@stmt, 5);

And another:

DECLARE @stmt NVARCHAR(MAX);

SELECT @stmt = N'foo' + CAST(REPLICATE(N'A', 4000) AS NVARCHAR(MAX)) + N'bar';

SELECT LEN(@stmt), LEFT(@stmt, 5), RIGHT(@stmt, 5);

And another:

DECLARE @stmt NVARCHAR(MAX);

SELECT @stmt = CAST(N'foo' AS NVARCHAR(MAX)) + REPLICATE(N'A', 4000) + N'bar';

SELECT LEN(@stmt), LEFT(@stmt, 5), RIGHT(@stmt, 5);

And the same is true for VARCHAR (assuming you replace the 4000’s with 8000’s). So, the lesson is, when concatenating large strings in SQL be careful that it doesn’t trunca

And So We Meet Again

This is not a technical topic, but one I find myself very passionate about. An article today at NPR brought it to the forefront. As my organization matures, like every organization, rampant movement is slowly replaced by more controlled but slower movement. Notice I used the word “movement”. As Hemingway said, “Never mistake motion for action.” What maturity in an organization often brings is a better ratio of action to motion. We do a better job of working on only those things that really matter, not going off on adventures that may never make their way to production. Or developing with a ready, fire, aim approach. But it also wraps that action in more red tape. It’s a trade-off that every organization encounters.

Anyway, meetings are a highly visible part of that red tape. And the article covers the topic pretty well. I just have one thing to add. It’s a little math that I’ve always kept in the back of my mind at every meeting. Here’s the formula:

Px = T * W

Or, Productivity Lost = Time of the Meeting X the number of Workers at the meeting.

If you are running a meeting for 6 knowledge workers for 2 hours, assume that you just lost 12 hours of productivity. Was that meeting worth those 12 hours? It might have been. But that’s the trade-off. Remember, everyone’s time is valuable.

So, if you have any control over meetings at your organization, please keep that formula in mind. I always do.

A-ha Moments

I’m preparing for my session at SQL Saturday #358 in Albuquerque next week, and something finally clicked. I’ll be adding another demo to my section on the differences between EXEC and sp_executesql now. Want to find out what it is? Then come to Albuquerque!

Gotta love those A-ha moments!

Albuquerque SQL Saturday

I’m excited to announce that I’ll be presenting “Fast and Furious Dynamic SQL” at SQL Saturday #358 in Albuquerque on February 7th, 2015. This will be my first time as a PASS presenter, so please save your best heckling and rotten vegetables for the occasion.

The session will cover various tips and tricks that are generally related to running sp_executesql from within a stored procedure. It will focus on performance, security, and maintainability.

Viewing the Plan Cache

I’m working on a script and wanted to know how SQL was caching the query plan. So I wrote this little snippet. The only real value I’m adding here is getting the XQuery correct to pull out the SQL itself.

DBCC FREEPROCCACHE; -- Run your query here SELECT qp.query_plan, qp.query_plan.value(N'(//@StatementText)[1]', N'NVARCHAR(MAX)') FROM sys.dm_exec_cached_plans AS cp CROSS APPLY sys.dm_exec_query_plan(cp.plan_handle) AS qp;

Of course, I don’t recommend that DBCC in a production environment. But in a dev environment, it makes things easy.